Reusable Python Code Modules, Part 12 - Data Transformation and Formatting

Optimizing Data Flow: Building Reusable Data Transformation and Formatting Modules

Data transformation and formatting are critical processes in backend development, ensuring data consistency, compatibility, and usability across different systems. This guide covers how to structure reusable data transformation and formatting modules in Python using Flask, manage data efficiently, and integrate common libraries.
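Here is one minimal way to structure such a module, assuming records arrive as plain dictionaries. Each step is a small function that returns a new record, and a pipeline applies the steps in order. The names add_total, format_price, and build_pipeline are hypothetical and chosen for illustration; they are not part of any library.

```python
from typing import Any, Callable, Dict, Iterable, List

Record = Dict[str, Any]
Transform = Callable[[Record], Record]


def add_total(record: Record) -> Record:
    """Hypothetical step: return a copy of the record with a computed 'total'."""
    return {**record, "total": record["quantity"] * record["price"]}


def format_price(record: Record) -> Record:
    """Hypothetical step: return a copy with 'price' as a two-decimal string."""
    return {**record, "price": f"{record['price']:.2f}"}


def build_pipeline(*steps: Transform) -> Callable[[Iterable[Record]], List[Record]]:
    """Compose transformation steps into one reusable callable."""
    def run(records: Iterable[Record]) -> List[Record]:
        results = []
        for record in records:
            for step in steps:
                record = step(record)  # each step receives the previous step's output
            results.append(record)
        return results
    return run


# Order matters: compute totals before prices are turned into strings
pipeline = build_pipeline(add_total, format_price)
rows = pipeline([{"product": "A", "quantity": 10, "price": 1.5}])
print(rows)
```

The steps do not depend on any framework, so the same pipeline could be called from a Flask view, a batch script, or a unit test.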

Common Libraries and Tools

1. Pandas

Pandas is a powerful data manipulation and analysis library for Python.

Key Features

DataFrame: Provides a two-dimensional, labeled DataFrame object for efficient manipulation of tabular data

Flexible Data Manipulation: Supports filtering, sorting, grouping, merging, reshaping, and aggregating data

Integration with Other Libraries: Built on NumPy and works directly with libraries such as Matplotlib and scikit-learn

Rich Functionality: Includes extensive tools for data cleaning (for example, handling missing values and converting types), transformation, and formatting
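The cleaning and formatting features can be illustrated with a short sketch. It assumes quantities arrive as strings with gaps, as they often do from CSV or JSON input. The clean_and_format function and the sample data are hypothetical; pd.to_numeric, fillna, astype, and Series.map are standard Pandas calls.

```python
import pandas as pd


def clean_and_format(df: pd.DataFrame) -> pd.DataFrame:
    """Hypothetical helper: clean quantities, add totals, format money columns."""
    out = df.copy()  # leave the caller's DataFrame untouched
    # Convert strings to numbers; unparseable or missing values become NaN, then 0
    out["quantity"] = pd.to_numeric(out["quantity"], errors="coerce").fillna(0).astype(int)
    out["total"] = out["quantity"] * out["price"]
    # Render money columns as fixed two-decimal strings for display or export
    for col in ("price", "total"):
        out[col] = out[col].map("{:.2f}".format)
    return out


raw = pd.DataFrame({
    "product": ["A", "B", "C"],
    "quantity": ["10", "5", None],
    "price": [1.5, 2.0, 3.25],
})
print(clean_and_format(raw))
```

Formatting to strings is done last, because string columns can no longer take part in arithmetic.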

2. Marshmallow

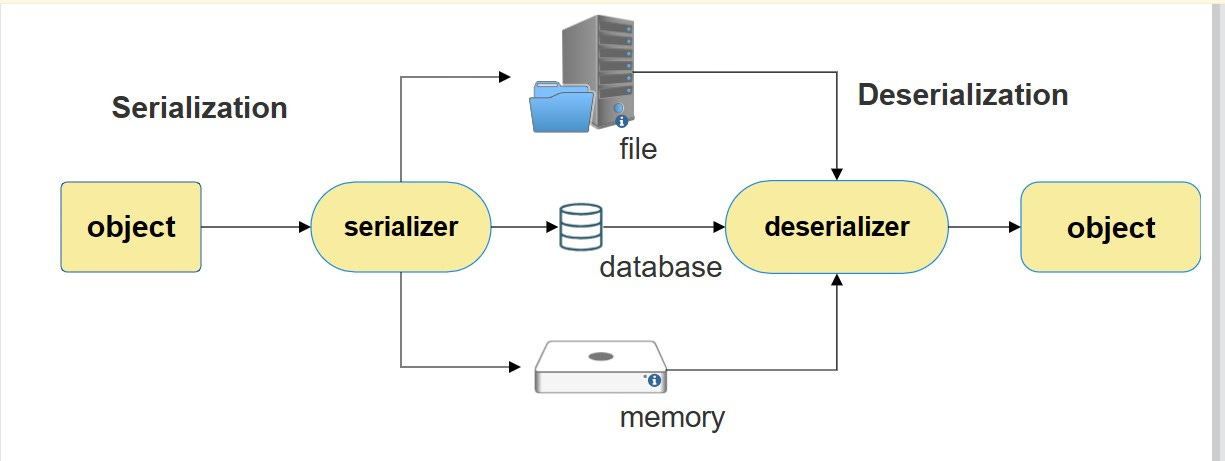

Marshmallow is an ORM/ODM/framework-agnostic library for object serialization and deserialization.

Key Features

Schema-Based Validation: Validates and transforms input data based on defined schemas

Serialization and Deserialization: Converts complex data types to and from native Python data types

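Customizable: Supports custom field types, method-based fields (fields.Method, fields.Function), and pre/post-processing hooks such as @post_load

Integration with Flask: Works with Flask for request validation and response serialization, commonly through extensions such as flask-marshmallow or webargs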

3. PyYAML

PyYAML is a YAML parser and emitter for Python.

Key Features

YAML Parsing: Parses YAML files and converts them to Python objects

YAML Emission: Converts Python objects to YAML format

Flexible Configuration: Supports custom tags and types for parsing and emitting YAML

Integration: Produces plain Python dicts, lists, and scalars, so parsed data can be passed directly to libraries such as Pandas, Marshmallow, or Pydantic
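Custom tags can be sketched as follows, assuming prices should load as decimal.Decimal rather than float. The !decimal tag, PriceLoader, and PriceDumper are hypothetical names. add_constructor, add_representer, construct_scalar, and represent_scalar are PyYAML's extension hooks. Subclassing SafeLoader and SafeDumper keeps the custom tag from leaking into yaml.safe_load globally.

```python
from decimal import Decimal

import yaml


class PriceLoader(yaml.SafeLoader):
    """SafeLoader subclass so the custom tag stays local to this loader."""


class PriceDumper(yaml.SafeDumper):
    """SafeDumper subclass that knows how to emit Decimal values."""


def construct_decimal(loader, node):
    # Read the raw scalar text (e.g. "1.50") and build an exact Decimal
    return Decimal(loader.construct_scalar(node))


def represent_decimal(dumper, value):
    # Emit Decimals with the same custom tag so they round-trip
    return dumper.represent_scalar("!decimal", str(value))


PriceLoader.add_constructor("!decimal", construct_decimal)
PriceDumper.add_representer(Decimal, represent_decimal)

doc = """
product: A
price: !decimal 1.50
"""

data = yaml.load(doc, Loader=PriceLoader)
print(data)

text = yaml.dump(data, Dumper=PriceDumper, sort_keys=False)
print(text)
```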

4. Pydantic

Pydantic is a data validation and settings management library using Python type annotations.

Key Features

Type Annotations: Uses Python type annotations for data validation and transformation

Automatic Parsing: Coerces and validates input data against the declared types when a model is instantiated

Custom Validators: Supports custom validation methods

Integration with FastAPI: Works seamlessly with FastAPI for data validation and serialization
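The following sketch shows custom validators alongside type coercion. It assumes Pydantic v2, where field_validator replaces the v1 validator decorator. The OrderLine model and its validator names are hypothetical.

```python
from pydantic import BaseModel, ValidationError, field_validator


class OrderLine(BaseModel):
    product: str
    quantity: int
    price: float

    @field_validator("product")
    @classmethod
    def normalize_product(cls, value: str) -> str:
        # Transformation: strip whitespace and upper-case product codes
        return value.strip().upper()

    @field_validator("price")
    @classmethod
    def price_must_be_positive(cls, value: float) -> float:
        # Validation: reject zero or negative prices
        if value <= 0:
            raise ValueError("price must be positive")
        return value


# Type annotations drive parsing: "10" -> 10 and "1.5" -> 1.5
line = OrderLine(product=" a ", quantity="10", price="1.5")
print(line)

try:
    OrderLine(product="B", quantity=5, price=0)
except ValidationError as exc:
    failed_fields = [error["loc"][0] for error in exc.errors()]
    print(failed_fields)
```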

Comparison

Pandas: Best for extensive data manipulation and analysis tasks with flexible data handling.

Marshmallow: Ideal for schema-based data validation and transformation with serialization and deserialization support.

PyYAML: Suitable for applications needing YAML parsing and emission with flexible configuration.

Pydantic: Best for type-based data validation and transformation with support for custom validators.

Examples

Example 1: Pandas

Setup:

```shell
$ pip install pandas
```

Configuration:

```python
import pandas as pd

def transform_data(data):
    df = pd.DataFrame(data)
    # Example transformation: add a computed 'total' column
    df['total'] = df['quantity'] * df['price']
    return df

data = [
    {'product': 'A', 'quantity': 10, 'price': 1.5},
    {'product': 'B', 'quantity': 5, 'price': 2.0},
]
```

Usage:

```python
transformed_data = transform_data(data)
print(transformed_data)
```

Example 2: Marshmallow

Setup:

```shell
$ pip install marshmallow
```

Configuration:

```python
from marshmallow import Schema, fields, post_load

class ProductSchema(Schema):
    product = fields.Str(required=True)
    quantity = fields.Int(required=True)
    price = fields.Float(required=True)
    total = fields.Float()

    # Runs after validation; adds the computed total to each loaded record
    @post_load
    def calculate_total(self, data, **kwargs):
        data['total'] = data['quantity'] * data['price']
        return data

product_schema = ProductSchema(many=True)

data = [
    {'product': 'A', 'quantity': 10, 'price': 1.5},
    {'product': 'B', 'quantity': 5, 'price': 2.0},
]
```

Usage:

```python
transformed_data = product_schema.load(data)
print(transformed_data)
```

Example 3: PyYAML

Setup:

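```shell
$ pip install pyyaml
```

Configuration:

```python
import yaml

def parse_yaml(yaml_str):
    # safe_load builds only plain Python objects (dicts, lists, scalars)
    return yaml.safe_load(yaml_str)

def emit_yaml(data):
    # sort_keys=False keeps the original key order instead of alphabetizing
    return yaml.safe_dump(data, sort_keys=False)

yaml_str = """
products:
  - product: A
    quantity: 10
    price: 1.5
  - product: B
    quantity: 5
    price: 2.0
"""
```

Usage:

```python
parsed_data = parse_yaml(yaml_str)
print(parsed_data)

yaml_output = emit_yaml(parsed_data)
print(yaml_output)
```

Example 4: Pydantic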

Setup:

```shell
$ pip install "pydantic>=2"
```

Configuration:

```python
from typing import List

from pydantic import BaseModel, model_validator

class Product(BaseModel):
    product: str
    quantity: int
    price: float
    total: float = 0.0

    # Runs after field validation, so quantity and price are already typed
    @model_validator(mode="after")
    def calculate_total(self) -> "Product":
        self.total = self.quantity * self.price
        return self

class ProductList(BaseModel):
    products: List[Product]

data = {
    "products": [
        {"product": "A", "quantity": 10, "price": 1.5},
        {"product": "B", "quantity": 5, "price": 2.0},
    ]
}
```

Usage:

```python
product_list = ProductList(**data)
print(product_list.model_dump_json())
```
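Each library hands off plain Python objects, so the approaches from the examples above can be combined into a single reusable step. This sketch assumes Pydantic v2. PyYAML parses the document, Pydantic validates and coerces each record, and Pandas computes totals. The LineItem model and the yaml_to_frame function are hypothetical names.

```python
import pandas as pd
import yaml
from pydantic import BaseModel


class LineItem(BaseModel):
    """Hypothetical record model used to validate each YAML entry."""
    product: str
    quantity: int
    price: float


def yaml_to_frame(yaml_str: str) -> pd.DataFrame:
    """Parse YAML, validate each record, and return a DataFrame with totals."""
    raw = yaml.safe_load(yaml_str)
    # Pydantic raises ValidationError here if a record is malformed
    items = [LineItem(**row) for row in raw["products"]]
    df = pd.DataFrame([item.model_dump() for item in items])
    df["total"] = df["quantity"] * df["price"]
    return df


yaml_str = """
products:
  - product: A
    quantity: 10
    price: 1.5
  - product: B
    quantity: "5"   # quoted in the source; Pydantic coerces it to int
    price: 2.0
"""

print(yaml_to_frame(yaml_str))
```

Validation happens before the data reaches Pandas, so malformed records fail early with a field-level error rather than surfacing later as a wrong column type.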