A Beginner’s Introduction to Professional Database Design

Understanding the Fundamentals of Effective Database Structuring

Database design is the process of creating a well-structured database that efficiently (1) stores and (2) retrieves data. Databases have formal / technical properties that a designer should strive to achieve in order to enforce data integrity constraints that are desired and expected from a set of business requirements.

A well-designed database ensures that data integrity is enforced and provides great performance and scalability, especially in the flexibility of adding or removing independent applications that communicate with the underlying data.

This is a shorter guide, compared to the other theoretical and architectural database posts, which focuses on outlining the key concepts in database design. It allows a new reader or experienced developer to thoughtfully think through these considerations as they begin or continue their understanding into formal database theory and design.

Key Concepts

Data Modeling

Normalization

Indexing

Data Modeling

Data modeling involves defining the structure of your database, including tables, relationships between tables, and constraints. It helps in organizing and representing data in a way that meets the requirements of your application.

Data modeling involves:

Entities and Attributes

Entities represent objects or concepts

Attributes are the properties that describe them

For example, in a customer database, an entity could be "Customer" with attributes like (Name, Email, Date of Birth, …)

Relationships

Relationships define how entities relate to each other

For example, a "Customer" entity might have a relationship with an "Order" entity, indicating that customers place orders

Diagrams

Entity-Relationship Diagrams (ERDs) are used to visually represent the data model

To recap:

A data model is nothing more than a structured way to model / represent an underlying system, given how relational databases store and retrieve data

An ER diagram is a visual representation of a data model that will allow us to take a model and eventually convert it into relations (tables) inside our database system

Types of Data Models

Like the design of any complex piece of software, the database design process has several key steps

In the database design process, we typically begin with business requirements that must be converted into a set of entities and relationships, not only for our database design but also for the applications that will have user or system interactivity.

Converting requirements into a final design requires various iterations and the use of data models helps us move towards the final specification in a systematic manner. This is where different types of data models come in to achieve this incremental modeling process.

Types

Conceptual Data Model

High-level overview of business concepts and relationships

We’ll consider visual and structured data diagrams as well as the final relations and properties

Logical Data Model

More detailed, without focusing on how the data is physically implemented

We’ll strongly consider the data integrity mechanisms to ensure our data is always correct and sound

Physical Data Model

Specific to the database management system (DBMS)

Details the tables, columns, indexes, and relationships

We consider what database system we’ll use as well as its capabilities

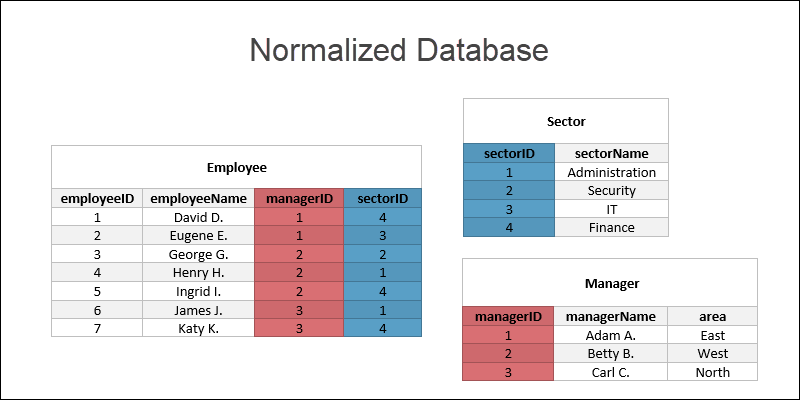

Normalization

Normalization is a technique used to minimize redundancy

The technique involves reorganizing your tables and fields in a way that minimizes duplication and dependencies between fields - for example, storing the age and date of birth, both, in a database would be considered redundant and normalization helps us (1) identify these inefficiencies and (2) systematically address them such that we arrive at the best database implementation

Normal Forms

Normal forms are ‘levels’ of achievement for a certain amount of normalization that has been achieved

There are different normal forms, which come with a set of guidelines that logically check if a database design is within that normal form - the benefit of a database being classified into a normal form is that it guarantees that a set of integrity problems will not exist at that level

Recipes / algorithms allow us to jump between one normal form into another, either breaking up our tables further (known as decomposition) or bringing our tables back together (known as synthesis)

The different normal forms include

First Normal Form (1NF)

Ensures each column contains atomic (indivisible) values

Each entry in a column is of the same data type

Second Normal Form (2NF)

Meets all the requirements of 1NF

Ensures that all non-key attributes are fully functionally dependent on the primary key

Third Normal Form (3NF)

Meets all the requirements of 2NF

Ensures that all attributes are not only fully dependent on the primary key but also independent of each other (no transitive dependency)

Boyce-Codd Normal Form (BCNF)

A stricter version of 3NF

Addresses certain types of anomalies not covered by 3NF

Higher Normal Forms

4NF and 5NF (among others) - address multivalued dependencies and join dependencies

Higher normal forms reduce redundancy even further

Normalization Example 1 - Passes

Below is a simple database for managing a library's books, authors, and publishers

Entities

Books

Authors

Publishers

Attributes

Books

BookID (Primary Key)

Title

AuthorID (Foreign Key)

PublisherID (Foreign Key)

YearPublished

Authors

AuthorID (Primary Key)

AuthorName

Publishers

PublisherID (Primary Key)

PublisherName

Relationships

Each book has one author

Each book has one publisher

Each author can write multiple books

Each publisher can publish multiple books

Normalization Steps

First Normal Form (1NF)

1NF states that

Each column contains atomic values

Each record entry in a column is of the same data type

Each table must have a primary key

Given the tables defined below, this meets the three conditions above:

Each column contains an atomic data type

Each record entry insertion is enforced by the database system and guarantees that we always have the same data type per column

Each table defined has a primary key

CREATE TABLE Books (

BookID INT PRIMARY KEY,

Title VARCHAR(255),

AuthorID INT,

PublisherID INT,

YearPublished INT

);

CREATE TABLE Authors (

AuthorID INT PRIMARY KEY,

AuthorName VARCHAR(255)

);

CREATE TABLE Publishers (

PublisherID INT PRIMARY KEY,

PublisherName VARCHAR(255)

);Second Normal Form (2NF)

2NF states that:

All non-key attributes are fully functionally dependent on the primary key

No partial dependency of any column on the primary key

Given the same database outline in 1NF:

Key attributes in our example refer to the primary keys, since we don’t define any other UNIQUE/key column

Each non-key attribute in the Books table is dependent on the entire primary key (BookID)

We can guarantee this because all attributes are directly related to the record stored - it would not make sense to have any of these fields independently / separately stored, since they’re all related - and the reason they’re dependent on the primary key is because the primary key serves as a unique identifier

Third Normal Form (3NF)

3NF states that:

All attributes are not only fully dependent on the primary key but also independent of each other

This means that there are no transitive dependencies

In the example carried forward so far:

There are no transitive dependencies as attributes

Attributes, Title, AuthorID, PublisherID, and YearPublished, are all directly dependent on BookID

Normalization Example 2 - Fails

In this second example, we look at a different database that comes with certain normalization issues in order to illustrate what these issues might look like

We’ll look at a single table called Orders

Attributes

OrderID (Primary Key)

CustomerName

ProductID

ProductName

Quantity

TotalPrice

OrderDate

Design and Normalization Issues:

CREATE TABLE Orders (

OrderID INT PRIMARY KEY,

-- Multiple customer names in a single field

CustomerName VARCHAR(255),

-- Multiple product IDs in a single field

ProductID VARCHAR(255),

-- Multiple product names in a single field

ProductName VARCHAR(255),

Quantity INT,

TotalPrice DECIMAL,

OrderDate DATE

);First Normal Form (1NF)

The Orders table has multiple values in the CustomerName field

Example - John Doe, Jane Smith

A customer name is not atomic, since it refers to a combination of the first, middle and last names

ProductID and ProductName are not atomic if multiple products can be in a single order

Both of these observations violate the 1NF rule of atomicity

Second Normal Form (2NF)

Assuming we fix the 1NF issue by separating products into their own table:

There is a partial dependency if we have combined keys (e.g., OrderID + ProductID)

If TotalPrice is dependent on ProductID and Quantity, it should be in a separate table to ensure full functional dependency

CREATE TABLE Orders (

OrderID INT,

ProductID INT,

Quantity INT,

TotalPrice DECIMAL,

OrderDate DATE,

PRIMARY KEY (OrderID, ProductID)

);

CREATE TABLE Customers (

OrderID INT,

CustomerName VARCHAR(255)

);Third Normal Form (3NF)

Assuming we fix the 2NF issue by properly linking Orders with Customers:

If ProductName is included in Orders, it creates a transitive dependency (ProductID -> ProductName)

CREATE TABLE Orders (

OrderID INT PRIMARY KEY,

CustomerID INT,

OrderDate DATE

);

CREATE TABLE OrderDetails (

OrderID INT,

ProductID INT,

Quantity INT,

TotalPrice DECIMAL,

PRIMARY KEY (OrderID, ProductID)

);

CREATE TABLE Customers (

CustomerID INT PRIMARY KEY,

CustomerName VARCHAR(255)

);

CREATE TABLE Products (

ProductID INT PRIMARY KEY,

ProductName VARCHAR(255)

);Summary of Normalization Examples

The first design example meets the first four normal forms, ensuring data integrity and reducing redundancy.

The second design example demonstrates common issues at each normalization step, highlighting partial dependencies, transitive dependencies, and other violations.

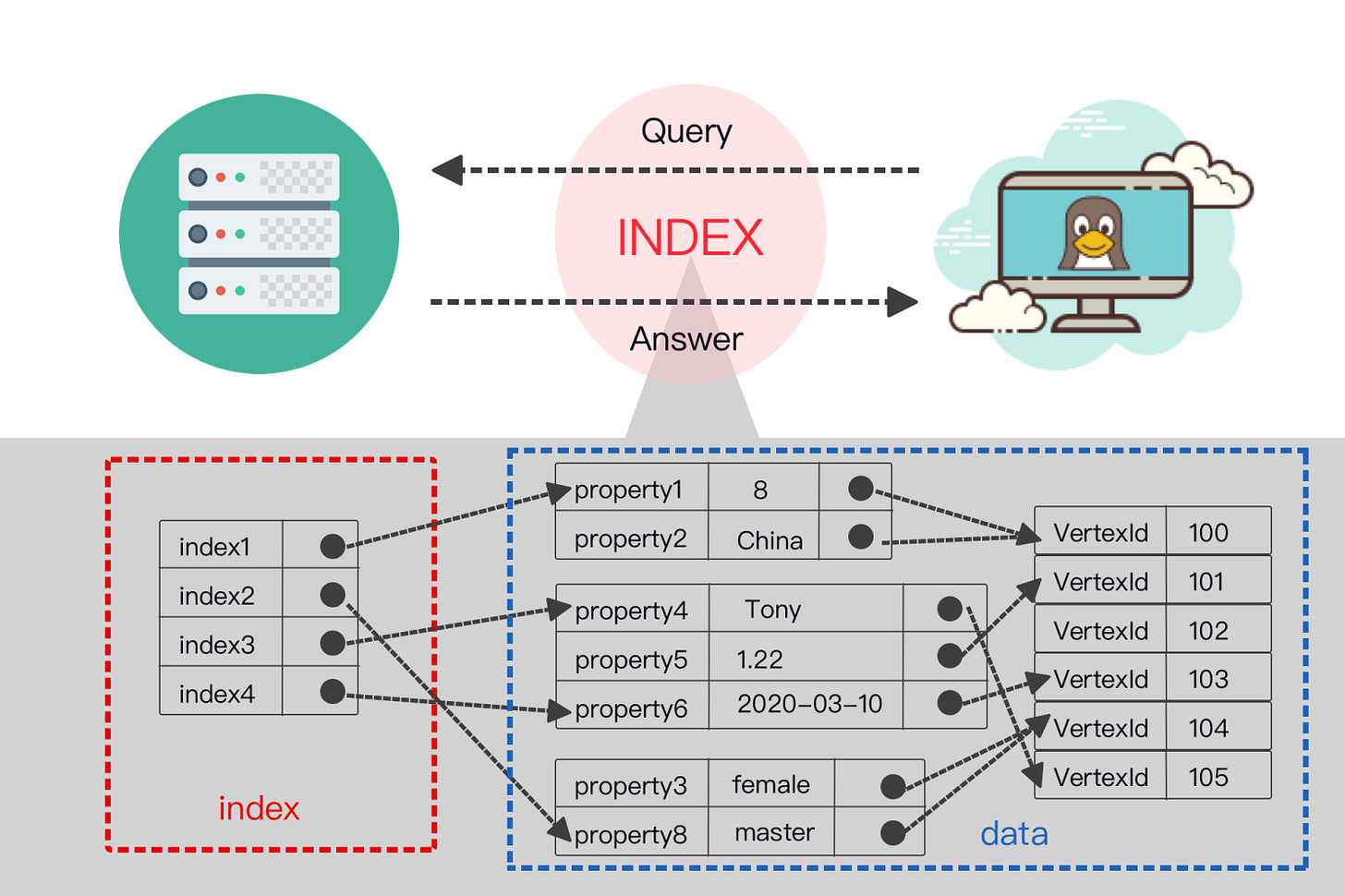

Indexing

Indexing is a database optimization technique

Indexing is a technique that improves the speed of data retrieval operations on a database table

As you know in software design, nothing is free - no decision comes without a trade-off:

In order to get better speed, we must sacrifice (1) storage size and (2) maintenance work

By getting better speed, we increase the cost of additional storage and maintenance overhead

Types of Indexes

Primary Index

Automatically created by the database system when a primary key is defined

A primary index uniquely identifies each record in the table, using the primary key

Secondary Index

Created manually and on non-primary key columns

Its purpose is to improve query performance

Secondary indices can be unique or non-unique

Clustered Index

Determines the physical order of data in the table

There can be only one clustered index per table

Non-clustered Index

Does not alter the physical order of the table

These can be created on any column, allowing multiple non-clustered indexes per table

Index Structures

Indices are represented in software as different types of data structures. Given that indices are used in order to achieve performance / speed-related benefits, unique data structures must be used to implement these benefits.

The most prevalent data structures used to represent index structures are outlined below:

B-tree Indexes

A type of Tree data structure

These are balanced tree structures that maintain sorted data and allow searches, insertions, deletions, and sequential access

Hash Indexes

Hash indices are a data structure that utilize a hash function to map search keys to corresponding records, providing fast data retrieval for exact match queries

Bitmap Indexes

Bitmap indices are a data structure that utilize bitmaps

These are efficient for columns with a limited number of distinct values, often used in data warehousing

Pros and Cons of Indexing

Pros

Improved Query Performance - Faster retrieval of records, especially for large datasets

Efficient Sorting - Speeds up ORDER BY operations

Reduced I/O Operations - Decreases the number of disk accesses required for query execution

Cons

Storage Overhead - Additional space is required to store indexes

Maintenance Cost - Indexes need to be updated when data is inserted, updated, or deleted, which can impact performance

Design Steps

In our final section of this guide, we’ll look at a concrete example of taking a database design from a purely conceptual idea all the way through until the end, where we have a functioning database. The steps that we’ll go through are the first three steps of the six steps outlined below. Given that the later three steps are advanced topics, these will be reserved for the more advanced and technical blog posts in database theory and design.

Steps

Requirement analysis

Understand what data is to be stored

What apps must be built on top

What operations are most frequent

Conceptual database design

Develop a high-level description of the data to be stored in the database and its constraints

This step involves the ER model (semantic model)

Logical database design

Convert the conceptual database design into a database schema in the data model of the DBMS

Converting the ER schema into a relational database schema

Schema refinement

Analyze the collection of relations in the relational database schema to identify problems and refine it

Theory of normalization - relations-restructuring

Physical database design

Performance criteria and requirements

Clustering of tables and creation of views

Application and security design

Design Example

Designing a Library Database

Let's apply what we've learned to design a simple library database

Step 1 - Conceptual Model (ER Diagram)

The first step in designing a database is to create a conceptual model using an ER diagram

This diagram visually represents the entities and relationships of a database

Entities and Relationships

Books

Represents the collection of books in the library

Attributes: book_id, title, author, published_year

Borrower

Represents the member who borrow books from the library

Attributes: member_id, name, email

Loans

Represents the records of books being borrowed and returned

Attributes: loan_id, book_id, member_id, loan_date, return_date

Relationships

Books and Loans

A book can be loaned to multiple members over time

A loan record refers to one book

Members and Loans

A member can borrow multiple books over time

A loan record refers to one member

In the ER diagram:

Books and Members have a many-to-many relationship through Loans.

This many-to-many relationship is resolved by creating the Loans entity, which acts as a junction table.

Step 2 - Logical Schema (SQL)

After defining the conceptual model, the next step is to translate it into a logical schema using SQL

The logical schema defines the structure of the database in terms of tables, columns, data types, and relationships

SQL Commands

The books table stores information about each book in the library

The members table stores information about each library member

The loans table records each instance of a book being borrowed by a member

Includes the loan and return dates

Foreign keys (book_id, member_id) in the loans table create relationships with the books and members tables

CREATE TABLE books (

book_id INT PRIMARY KEY,

title VARCHAR(100) NOT NULL,

author VARCHAR(50) NOT NULL,

published_year INT

);

CREATE TABLE members (

member_id INT PRIMARY KEY,

name VARCHAR(50) NOT NULL,

email VARCHAR(100) UNIQUE

);

CREATE TABLE loans (

loan_id INT PRIMARY KEY,

book_id INT,

member_id INT,

loan_date DATE,

return_date DATE,

FOREIGN KEY (book_id) REFERENCES books (book_id),

FOREIGN KEY (member_id) REFERENCES members (member_id)

);Step 3 - Physical Design Considerations

Physical design involves optimizing the database for performance, storage, and scalability

Key considerations include indexing, data types, and constraints

Indexing

Indexes on Foreign Keys:

Adding indexes on book_id and member_id columns in the loans table can significantly speed up queries that join the loans table with the books and members tables

CREATE INDEX idx_book_id ON loans(book_id);

CREATE INDEX idx_member_id ON loans(member_id);Data Types and Constraints

Choose appropriate data types for each column to ensure efficient storage and retrieval

For example

VARCHAR for strings

INT for integers

Use constraints to enforce data integrity

NOT NULL to ensure that critical fields (e.g., title, name) are always populated

UNIQUE to ensure that email addresses are unique across members

CREATE TABLE books (

book_id INT PRIMARY KEY,

title VARCHAR(100) NOT NULL,

author VARCHAR(50) NOT NULL,

published_year INT CHECK (published_year > 0)

);

CREATE TABLE members (

member_id INT PRIMARY KEY,

name VARCHAR(50) NOT NULL,

email VARCHAR(100) UNIQUE NOT NULL

);

CREATE TABLE loans (

loan_id INT PRIMARY KEY,

book_id INT,

member_id INT,

loan_date DATE NOT NULL,

return_date DATE,

FOREIGN KEY (book_id) REFERENCES books (book_id),

FOREIGN KEY (member_id) REFERENCES members (member_id)

);

CREATE INDEX idx_book_id ON loans(book_id);

CREATE INDEX idx_member_id ON loans(member_id);Best Practices in Database Design

In this final section, we’ll quickly analyze three core components of best practices related to database design:

Data Integrity

Performance Optimization

Scalability and Flexibility

Data Integrity

Data integrity guarantees the accuracy, consistency, and reliability of data stored in a database

Here are some best practices:

Use Constraints

Example SQL constraints - NOT NULL, UNIQUE, and FOREIGN KEY

Employ constraints to enforce rules on data manipulation

NOT NULL ensures essential fields are always populated

UNIQUE prevents duplicate entries

FOREIGN KEY maintains referential integrity between related tables

Validation Rules

Implement validation rules at the database level to ensure that only valid data is stored

This reduces the risk of errors and inconsistencies

Performance Optimization

Optimizing database performance involves designing and tuning the database to ensure efficient query execution and data retrieval. Key practices include:

Indexing

Design indexes carefully based on query patterns and workload

Indexes help speed up data retrieval operations by allowing the database engine to quickly locate relevant rows

Identify frequently queried columns and create indexes on them

Consider composite indexes for queries that involve multiple columns

Regularly monitor and optimize indexes to ensure they continue to provide optimal performance as data volumes and query patterns change

Query Optimization

Write efficient SQL queries that minimize resource consumption and maximize throughput

Use query execution plans and profiling tools to identify and resolve performance bottlenecks

Scalability and Flexibility

Normalization

Apply normalization techniques to reduce redundancy and improve data integrity

Normalized databases are typically easier to scale and modify

Partitioning

Partition large tables into smaller, more manageable segments based on criteria such as range, list, or hash - this can improve query performance and facilitate data distribution on devices/regions

Replication

Implement database replication to create redundant copies of data across multiple servers

Replication enhances availability and fault tolerance while also supporting read scaling by offloading read operations to replica servers

Flexible Schema Design

Use a flexible schema design that accommodates evolving application requirements

JSON or XML can provide flexibility in storing diverse data formats

Example

-- Example of creating a partitioned table

CREATE TABLE sales (

sale_id INT PRIMARY KEY,

sale_date DATE,

amount DECIMAL(10, 2),

...

)

PARTITION BY RANGE (YEAR(sale_date)) (

PARTITION p1 VALUES LESS THAN (2020),

PARTITION p2 VALUES LESS THAN (2021),

PARTITION p3 VALUES LESS THAN (2022),

PARTITION p4 VALUES LESS THAN MAXVALUE

);Next Steps

Deep Dive into Database Design - Part 1

This is an extended guide on the theoretical and academic approach to database design. While databases have had an explosion of adoption in the modern world and their implementation transmitted down to software developers building sophisticated web applications, mobile applications, APIs, and other systems requiring storage capabilities, databases had h…

Deep Dive into Database Design - Part 2

This is the second part of a two-part guide on database design. Recall that this guide is split into two sections: Part 1 covers database systems, database design and the relational model. Examples are provided to explore the different aspects of these topics in detail.

Modern Database Options

Alright, let's talk about modern databases. The landscape of data storage and management has evolved a lot, and if you're still sticking to just SQL, you're missing out. This post will break down some of the latest technologies, why they matter, and how to use them. We'll cover SQL databases, NoSQL options, and some other cool stuff that's making waves.